Robust Perception-Based Control

Vision-based sensing is increasingly common on robotic platforms, which makes it important to understand how the resulting measurement errors affect the safety of a system. My work focuses on developing computationally efficient safety-critical control tools that are robust to measurement error and can be deployed on real-world robots.

Measurement-Robust Control Barrier Functions: Certainty in Safety with Uncertainty in State

Ryan K. Cosner, Andrew W. Singletary, Andrew J. Taylor, Tamas G. Molnár, Katherine L. Bouman, and Aaron D. Ames, in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 2021, pp. 6286-6291.

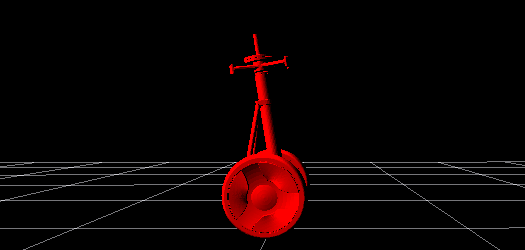

Abstract: The increasing complexity of modern robotic systems and the environments they operate in necessitates the formal consideration of safety in the presence of imperfect measurements. In this paper we propose a rigorous framework for safety-critical control of systems with erroneous state estimates. We develop this framework by leveraging Control Barrier Functions (CBFs) and unifying the method of Backup Sets for synthesizing control invariant sets with robustness requirements—the end result is the synthesis of Measurement-Robust Control Barrier Functions (MR-CBFs). This provides theoretical guarantees on safe behavior in the presence of imperfect measurements and improved robustness over standard CBF approaches. We demonstrate the efficacy of this framework both in simulation and experimentally on a Segway platform using an onboard stereo-vision camera for state estimation.

Guaranteeing Safety of Learned Perception Modules via Measurement-Robust Control Barrier Functions

Sarah Dean, Andrew J. Taylor, Ryan K. Cosner, Benjamin Recht, and Aaron D. Ames, in Proceedings of the 4th Conference on Robot Learning (CoRL), Boston, MA, USA, 2020.

Best Paper Nominee

Abstract: Modern nonlinear control theory seeks to develop feedback controllers that endow systems with properties such as safety and stability. The guarantees ensured by these controllers often rely on accurate estimates of the system state for determining control actions. In practice, measurement model uncertainty can lead to error in state estimates that degrades these guarantees. In this paper, we seek to unify techniques from control theory and machine learning to synthesize controllers that achieve safety in the presence of measurement model uncertainty. We define the notion of a Measurement-Robust Control Barrier Function (MR-CBF) as a tool for determining safe control inputs when facing measurement model uncertainty. Furthermore, MR-CBFs are used to inform sampling methodologies for learning-based perception systems and quantify tolerable error in the resulting learned models. We demonstrate the efficacy of MR-CBFs in achieving safety with measurement model uncertainty on a simulated Segway system.
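
To make the idea of robustness to measurement error concrete, here is a minimal one-dimensional sketch. It is not the formulation from either paper: it assumes a single-integrator system (x' = u), a barrier h(x) = x_max - x, and a known bound eps on the error between the true state and its estimate. The names `safety_filter` and `simulate`, and all parameter values, are hypothetical and chosen only for illustration. The filter tightens the usual barrier condition by the worst-case error, so the constraint holds for every state consistent with the measurement.

```python
def safety_filter(u_des, x_hat, x_max, alpha, eps):
    """Clamp the desired input so the barrier condition holds for all states
    within eps of the estimate x_hat (illustrative 1-D case, not the papers' method)."""
    # h(x) = x_max - x has Lipschitz constant 1, so h(x) >= h(x_hat) - eps.
    h_worst = (x_max - x_hat) - eps
    # For x' = u the condition h' >= -alpha * h becomes u <= alpha * h.
    return min(u_des, alpha * h_worst)


def simulate(eps_filter, eps_true=0.1, x0=0.0, x_max=1.0,
             alpha=2.0, u_des=1.0, dt=0.01, steps=1000):
    """Forward-Euler rollout with a worst-case sensor bias; returns the largest x reached."""
    x = x0
    x_peak = x
    for _ in range(steps):
        # Assumed adversarial sensor: reports the robot farther from the boundary than it is.
        x_hat = x - eps_true
        u = safety_filter(u_des, x_hat, x_max, alpha, eps_filter)
        x += dt * u
        x_peak = max(x_peak, x)
    return x_peak


# Filter that ignores measurement error vs. one that accounts for the bound.
nominal_peak = simulate(eps_filter=0.0)
robust_peak = simulate(eps_filter=0.1)
print(f"nominal peak: {nominal_peak:.3f}, robust peak: {robust_peak:.3f}")
```

In this toy setting the filter that ignores the error bound lets the true state drift past x_max by roughly the sensor bias, while the tightened filter keeps it inside the safe set. The cost is conservatism: when the actual error is smaller than eps, the robust filter stops short of the boundary.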